Let’s say you’re testing a new look for your website. You think it’ll increase conversions, but of course it could do the opposite, and reduce conversions. How do you decide whether or not to launch the feature?

As you can imagine, this is a very common problem, ranging from website look-and-feel to pricing ($20/month vs. $100/year) to product features to ranking questions (which songs or search results do I show?) and so on. And because it’s so common, it’s been very well studied. Many of the core scenarios have been worked out in academic papers and in open source code.

But even though it’s well-studied, it’s not necessarily well-understood, especially at the front lines of day-to-day decision-making. While this won’t be an exhaustive review of all kinds of statistical decision-making, or a deep-dive into any particular part of it (like Multi-Armed Bandits), below I’ll list some of the principles that keep coming up:

I. There is no default.

Let’s take the example above. Your website today certainly has something up there. So that certainly feels like the default, and legacy tools like NHST (null hypothesis significance testing) and psychological quirks like loss aversion reinforce this feeling. But, logically, the decision is just as much to keep the existing landing page as it is to replace the landing page.

You might be thinking “But wait, there are real costs to changing the landing page. Engineers have to test and deploy the change, users may lose familiarity with the site, etc.” And sure, those switching costs are real and should live in the “minus” column for making a change, but once all the costs and benefits are incorporated, there shouldn’t be any particular pre-existing bias for one option over another.

Tools like NHST are the real culprit here. Let’s take this somewhat contrived example:

You show your existing landing page to 1000 people, of whom 140 convert.

You show a variant to 100 people, of whom 20 convert.

If you ran this through a logistic regression, the difference would not be significant at the 5% threshold, or even at 10% (the p-value is 10.7%). Yet if this is all the data you had (and there were no switching costs), switching to the variant is absolutely correct.
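Here is a minimal sketch of where a figure like 10.7% can come from. It assumes the number is the two-sided Wald p-value on the variant coefficient of a logistic regression with a single variant indicator; the text does not say which test was actually used. With one binary predictor the fitted coefficient has a closed form, so no regression library is needed.

```python
import math

# Observed data from the example above.
base_n, base_conv = 1000, 140
var_n, var_conv = 100, 20


def log_odds(conversions, n):
    """Log odds of converting, given `conversions` out of `n` visitors."""
    return math.log(conversions / (n - conversions))


# With a single binary predictor, the fitted logistic-regression coefficient
# is the difference in log odds between the two groups.
coef = log_odds(var_conv, var_n) - log_odds(base_conv, base_n)

# Its standard error is the square root of the summed reciprocal cell counts.
se = math.sqrt(
    1 / base_conv + 1 / (base_n - base_conv)
    + 1 / var_conv + 1 / (var_n - var_conv)
)

z = coef / se
# Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|)).
p_value = math.erfc(abs(z) / math.sqrt(2))

print(f"coef={coef:.3f}  se={se:.3f}  z={z:.2f}  p={p_value:.3f}")
```

Under these assumptions the p-value comes out at about 0.107, just short of the 10% threshold.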

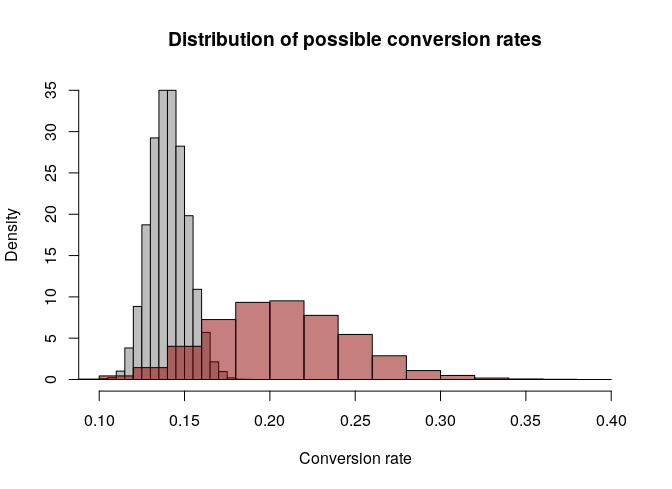

Visually, the distribution of possible conversion rates looks like this:

In my simulation, the variant has a higher conversion rate than the baseline 95% of the time, and even in the 5% of cases where the baseline is higher, you’re giving up only 1.4 percentage points of conversion rate, on average. Contrast this with the almost 7 percentage points you lose when you choose the baseline and are wrong.
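A simulation along these lines can be sketched as follows. This is not necessarily the simulation used for the numbers above: it assumes a uniform Beta(1, 1) prior on each conversion rate and draws from the resulting Beta posteriors, so the results should land near the quoted figures without matching them exactly.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable
DRAWS = 100_000

# Posterior for each conversion rate under a uniform Beta(1, 1) prior
# (an assumption): Beta(1 + conversions, 1 + non-conversions).
baseline = [random.betavariate(1 + 140, 1 + 860) for _ in range(DRAWS)]
variant = [random.betavariate(1 + 20, 1 + 80) for _ in range(DRAWS)]

diffs = [v - b for v, b in zip(variant, baseline)]
variant_ahead = [d for d in diffs if d > 0]
baseline_ahead = [-d for d in diffs if d <= 0]

# Share of draws in which the variant's conversion rate is higher.
p_variant_better = len(variant_ahead) / DRAWS

# Average conversion rate given up by launching the variant
# in the draws where the baseline is actually better.
loss_if_variant_wrong = sum(baseline_ahead) / len(baseline_ahead)

# Average conversion rate given up by keeping the baseline
# in the draws where the variant is actually better.
loss_if_baseline_wrong = sum(variant_ahead) / len(variant_ahead)

print(f"P(variant better)           = {p_variant_better:.3f}")
print(f"avg loss, variant is wrong  = {loss_if_variant_wrong:.4f}")
print(f"avg loss, baseline is wrong = {loss_if_baseline_wrong:.4f}")
```

The asymmetry is the point: the variant is rarely the wrong choice and costs little when it is, while keeping the baseline is usually the wrong choice and costs several times more.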

This story is too common: making an obviously worse decision just because the option that “wins” statistically failed to “prove” it, without requiring the same level of proof from the baseline.

II. Get your errors right

When building a statistical model, the most important step is to decide on the “loss” function, which determines how large an error the model made on each data point. (This is how ML folks talk about it. I prefer to think about it in terms of the process that generated the data, its response distribution, but tomayto, tomahto.) E.g., when modeling a binary outcome (as above) you may choose a logistic loss; for a count process, Poisson; for a survival model, Weibull; and so on.

Choosing the wrong loss for your data will leave the model’s predictions fitting the data poorly.
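To make the link between a loss and a response distribution concrete, here is a small sketch in which each loss is simply the negative log-likelihood of one observation under the assumed distribution. The function names are illustrative and not taken from any particular library.

```python
import math


def bernoulli_loss(y, p):
    """Logistic (log) loss: negative log-likelihood of a 0/1 outcome `y`
    when the model predicts probability `p` of a 1."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def poisson_loss(y, mu):
    """Negative log-likelihood of a count `y` when the model predicts
    a Poisson mean of `mu`."""
    return mu - y * math.log(mu) + math.lgamma(y + 1)


# A confident wrong prediction is penalised far more than a confident right one.
print(bernoulli_loss(1, 0.9), bernoulli_loss(1, 0.1))

# For a count of 3, predicting a mean of 3 costs less than predicting 10.
print(poisson_loss(3, 3.0), poisson_loss(3, 10.0))
```

Fitting a model then amounts to choosing the predictions that minimise the summed loss over the data, which is why a mismatched distribution produces a poor fit.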

But once you’ve modeled reality, you then need to model your business. And it’s almost always the case that different kinds of errors have different costs. Take for example a “hits” business, like a film studio. Let’s say it takes $100m to make a movie; a good movie can return $2 billion in profit; a bad movie makes zero; and with current practices (i.e., no model) there is a 7.5% probability of making a good movie.

Now you have a model, and it predicts whether or not the movie will be a success before you make the investment. Most classifiers produce (or can produce) a probability estimate, not just a 1 or 0, but unfortunately most statistical software and workflows are also set to collapse the probabilities back to a 1 or 0 with a 50% threshold.

But 50% rarely makes sense: let’s further assume your model is perfectly calibrated; e.g. if it returns a probability of 12.5% for the movie being a success, then exactly 12.5% of the time the movie would be a success (and so on for other estimates). What should your threshold be for making a movie?

Well, your breakeven probability is $100m / $2bn = 5%. If you only make the movies with a greater than 50% probability of success, you are throwing away approximately 22% of the potential profit (this exercise is left to the reader, but basically compute the profit if you use a 5% threshold and likewise at 50%, and take the difference).

The issue here is that the cost of making a movie that’s a dud is $100m, but the cost of not making a movie that’s a hit is twenty times that, at $2bn. The errors have very different costs; it doesn’t make sense to treat them the same, which is what you’re doing implicitly when you use the default 50% threshold.
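The threshold comparison can be sketched like this. Two assumptions are doing the work: a hit is treated as returning $2bn against the $100m outlay, which gives the 5% breakeven used above, and the slate of model scores is a made-up Beta distribution with a 7.5% mean. Since the text does not give the real distribution of scores, the share of profit forgone printed here is illustrative and is not expected to reproduce the 22% figure.

```python
import random

COST = 100.0         # $m to make one movie
HIT_RETURN = 2000.0  # $m returned by a hit; a dud returns nothing

BREAKEVEN = COST / HIT_RETURN  # hit probability at which expected profit is zero


def expected_profit(p_hit):
    """Expected profit ($m) from making a movie with hit probability `p_hit`,
    assuming the model is perfectly calibrated."""
    return p_hit * HIT_RETURN - COST


def portfolio_profit(scores, threshold):
    """Total expected profit from making every movie scored above `threshold`."""
    return sum(expected_profit(p) for p in scores if p > threshold)


# Hypothetical slate of calibrated scores with a mean of 7.5% (an assumption).
random.seed(0)
scores = [random.betavariate(0.3, 3.7) for _ in range(50_000)]

profit_at_breakeven = portfolio_profit(scores, BREAKEVEN)
profit_at_half = portfolio_profit(scores, 0.5)
share_forgone = 1 - profit_at_half / profit_at_breakeven

print(f"breakeven threshold: {BREAKEVEN:.0%}")
print(f"share of profit forgone at a 50% threshold: {share_forgone:.0%}")
```

Whatever the score distribution, no threshold can beat the breakeven one under perfect calibration: it includes every movie with positive expected profit and excludes every movie with negative expected profit.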

What this illustrates is that “getting your errors right” means two things: first, getting your model’s errors right, and second, getting your decision’s errors right.

III. Make the decision sequential, even if it’s not

Almost any decision can be made sequentially, rather than all-or-nothing, in some way. Smaller companies can get away with simply changing something as important as their pricing from one day to the next, because no one will notice. Bigger companies with more attention on them often test things out one market at a time before rolling changes out globally.

The value of making decisions sequentially is often known as option value: the ability to retain options even as you make decisions. In the example I led with, the company made the decision sequential by running a test first, and using the results of the test to guide their decision.
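As a rough illustration of option value, the sketch below compares committing blind with testing first. All the numbers are hypothetical, and the test is assumed to be free and perfectly informative, which real tests are not.

```python
# Hypothetical payoffs for a proposed change (illustrative numbers only).
P_GOOD = 0.4        # prior probability the change is good
PAYOFF_GOOD = 10.0  # gain if you launch and the change is good
PAYOFF_BAD = -5.0   # loss if you launch and the change is bad

# Decide now: launch only if the blind expected payoff beats doing nothing (0).
launch_blind = P_GOOD * PAYOFF_GOOD + (1 - P_GOOD) * PAYOFF_BAD
value_decide_now = max(launch_blind, 0.0)

# Test first: learn the outcome, then launch only when launching pays off.
value_test_first = (
    P_GOOD * max(PAYOFF_GOOD, 0.0) + (1 - P_GOOD) * max(PAYOFF_BAD, 0.0)
)

# The option value is what the ability to decide after the test is worth.
option_value = value_test_first - value_decide_now

print(f"decide now: {value_decide_now:.1f}")
print(f"test first: {value_test_first:.1f}")
print(f"option value of testing: {option_value:.1f}")
```

A noisy or costly test is worth less than this upper bound, but the logic is the same: the test earns its keep by letting you avoid the bad branch.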

Getting creative about finding ways to run tests can improve decision-making enormously. Any kind of test that gives you data can improve your decision. The logic of why this works and a toy example are laid out in “How much is data worth to you?”