The other day I posted this Twitter thread:

Which, for my account at least, seemed to generate some numbers. My main point was this: “AI” gets a lot of hype, but there are other things going on in data science that may change the world just as much.

When I say “AI”, I mean the common usage of the term, which is almost always synonymous with deep learning, or the use of deep neural networks. This includes things like:

Machine translation (taking sentences in one language and translating them to another, as Google Translate does)

Generative adversarial networks (generating persuasive simulacra of paintings, songs, etc.)

Self-driving cars

Deep learning can do eye-popping things, and since it often works with images, text, or audio, the power of these methods is easier to see, often quite literally. So it’s easy to see why AI would take the most mindshare among the general public.

But, perhaps less visibly, two other revolutions (explosive proliferations of new technologies) are taking place, each with the potential for as much impact on the world as AI.

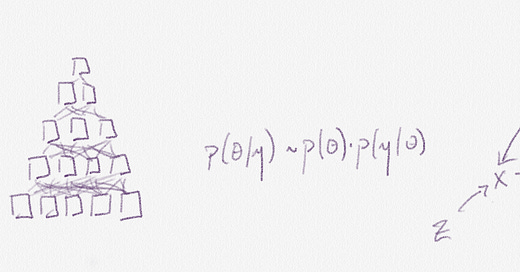

Bayesian inference

Bayesian inference applies to models where the modeled parameters and their uncertainties are of interest to the analyst. Modern deep learning models have so many parameters (in the millions, billions, or even trillions) that no single one of them matters. When you’re using Bayesian inference, it’s often only one number that matters, so it’s critical that you get that number right.

For example,

You may be estimating the probability that a certain ad variant is the best

You may want to know the number of people in a given media market that haven’t yet made up their mind about who to vote for

You may want to know the reproduction number for a widespread airborne disease

Crucially, part of getting that number “right” is understanding what your uncertainty about the number is. Enter Bayes.
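To make the ad example concrete, here is a minimal sketch. The click counts are made up, and the model (a flat Beta(1, 1) prior on each variant's click rate with a binomial likelihood) is just the simplest one I could pick for illustration, not a recommendation for real ad data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical click data for two ad variants (invented numbers).
clicks = {"A": 120, "B": 145}
views = {"A": 2400, "B": 2500}

# A Beta(1, 1) prior combined with a binomial likelihood gives a Beta
# posterior for each click rate, so we can sample from it directly.
draws = {
    name: rng.beta(1 + clicks[name], 1 + views[name] - clicks[name], size=100_000)
    for name in clicks
}

# The "one number": the probability that B has the higher click rate.
prob_b_best = float(np.mean(draws["B"] > draws["A"]))

# And the uncertainty around B's click rate itself (95% credible interval).
interval_b = np.percentile(draws["B"], [2.5, 97.5])

print(f"P(B is best) = {prob_b_best:.3f}")
print(f"95% interval for B's click rate: {interval_b[0]:.4f} to {interval_b[1]:.4f}")
```

The point is that the answer comes out as a probability and an interval rather than a single "winner", which is exactly the uncertainty you need before making a decision.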

In the past, most practitioners were limited to “off the shelf” models, since each statistical model had to come with its own bespoke estimation procedure, worked out one by one in academic journals and implemented one by one in software packages. If your problem didn’t fit with a given model, you were outta luck. It was possible, perhaps, to arrive at the “most likely number”, but getting the uncertainties right required someone else to work through complex derivations.

That’s changing; it’s getting easier and easier to write custom models. Two algorithms, Hamiltonian Monte Carlo and Automatic Differentiation Variational Inference, along with (relatively) user-friendly software interfaces (Stan, PyMC3, Turing, TensorFlow Probability), have brought almost infinite flexibility to practitioners.
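For a feel of what Hamiltonian Monte Carlo does under the hood, here is a bare-bones sketch in plain NumPy. Everything in it is an assumption made for illustration: the count data are invented, the model (Poisson counts with a wide normal prior on the log rate) is a toy, and the gradient is written by hand where the packages above would use automatic differentiation. Real tools also tune the step size and trajectory length for you; here they are fixed by hand.

```python
import numpy as np

rng = np.random.default_rng(1)
y = np.array([3, 5, 4, 6, 2, 4, 5, 3])  # hypothetical observed counts

def log_post(theta):
    # Poisson likelihood with rate exp(theta) and a Normal(0, 10) prior
    # on theta, with constant terms dropped.
    return np.sum(y * theta - np.exp(theta)) - theta**2 / (2 * 10.0**2)

def grad_log_post(theta):
    # Hand-written derivative of log_post with respect to theta.
    return np.sum(y) - y.size * np.exp(theta) - theta / 10.0**2

def hmc(n_samples, step=0.04, n_leapfrog=7, theta0=0.0):
    theta = theta0
    samples = np.empty(n_samples)
    for i in range(n_samples):
        p0 = rng.normal()            # fresh random momentum
        q, p = theta, p0
        # Leapfrog integration: half step in momentum, alternating full
        # steps in position and momentum, then a final half step.
        p += 0.5 * step * grad_log_post(q)
        for _ in range(n_leapfrog - 1):
            q += step * p
            p += step * grad_log_post(q)
        q += step * p
        p += 0.5 * step * grad_log_post(q)
        # Metropolis correction for the integration error.
        log_accept = (log_post(q) - 0.5 * p**2) - (log_post(theta) - 0.5 * p0**2)
        if np.log(rng.uniform()) < log_accept:
            theta = q
        samples[i] = theta
    return samples

samples = hmc(3000)
rate_draws = np.exp(samples[500:])   # drop warm-up, convert log rate to rate
print(f"posterior mean rate: {rate_draws.mean():.2f}")
print(f"95% interval: {np.percentile(rate_draws, [2.5, 97.5])}")
```

The only model-specific pieces are `log_post` and its gradient. Swap those out and the sampler is unchanged, which is why pairing this algorithm with automatic differentiation makes custom models so much cheaper to write.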

Take the disease example above: the model that we on the rt.live team developed to infer R(t) would have been impossible to estimate less than a decade ago.

Causal inference

When we care about the “internal” parameters, we often — perhaps usually — care about causation (if I manipulate this variable, will this other variable change?). This is extraordinarily difficult in practice, and results that don’t take account of this are very easy to misinterpret, despite the ubiquity of the phrase “correlation does not imply causation”.

Without either (a) running an experiment or (b) doing fancy things to control for biases, statistical models only produce correlations, not causal effects.

This (rightly) led to a period where experimental designs, in which the causal effect of interest is isolated by manipulating experimental conditions, were privileged over statistical methods. Where experiments can be done, they are the most reliable way to estimate causal effects, but often (perhaps usually) experiments cannot be done, and so we are left with option (b): “doing fancy things”.

The revolution here is that these fancy things are becoming ever less fancy and easier for novices to understand and use, without loss of fidelity. The most famous and most important toolset is a set of visual approaches (literally, you draw arrows between variables; try it here), along with an algorithm (the “do-calculus”) that reduces these arrows to a formula that can be estimated with the data you have.
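As an illustration, take the simplest textbook diagram (my choice of example, not tied to any particular tool): one observed variable Z with arrows into both X and Y, plus an arrow from X to Y. For that diagram, the formula you end up with is the backdoor adjustment:

$$
P\bigl(Y \mid \operatorname{do}(X = x)\bigr) = \sum_{z} P(Y \mid X = x,\, Z = z)\, P(Z = z)
$$

The left-hand side is the thing you want (what happens to Y if you set X to x), and every term on the right-hand side can be estimated from observational data alone.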

What this means in practice is that all sorts of things that were previously impossible to estimate can now, or soon, be estimated. These “things” have the flavor of “if I do X, what will happen to Y?” (interventional), or “if I had done X, what would have happened to Y?” (counterfactual). Notice that each of these is fundamentally different from the data you have without an experiment, which is “I did X, and Y happened” (observational).
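A small simulation shows how far apart the observational and interventional answers can be. The data-generating process below is entirely made up: a variable Z drives both X and Y, and the true effect of X on Y is set to 1.0. Since I wrote the simulation, I can also "run the experiment" by setting X independently of Z, which you usually cannot do in real life.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 50_000

def slope(x, y):
    """Least-squares slope of y on x."""
    return np.polyfit(x, y, 1)[0]

# Observational world: Z influences both X and Y.
z = rng.normal(size=n)
x_obs = 2.0 * z + rng.normal(size=n)
y_obs = 1.0 * x_obs + 3.0 * z + rng.normal(size=n)  # true effect of X is 1.0

# Interventional world: we set X ourselves, so it no longer depends on Z.
x_do = rng.normal(size=n)
y_do = 1.0 * x_do + 3.0 * z + rng.normal(size=n)

naive = slope(x_obs, y_obs)       # "I did X, and Y happened"
experiment = slope(x_do, y_do)    # "if I do X, what will happen to Y?"

# Adjusting for Z recovers the causal effect from observational data alone.
design = np.column_stack([x_obs, z, np.ones(n)])
adjusted = np.linalg.lstsq(design, y_obs, rcond=None)[0][0]

print(f"naive observational slope: {naive:.2f}")
print(f"experimental slope:        {experiment:.2f}")
print(f"adjusted for Z:            {adjusted:.2f}")
```

In this setup the naive slope lands near 2.2 (analytically, 11/5), more than double the true effect, while both the experiment and the Z-adjusted regression land near 1.0. The adjustment only works here because Z is observed and the linear model is correct by construction; deciding what to adjust for in a real problem is what the diagrams are for.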

Most business and policy decisions have the flavor of the first example, “if I do X, what will happen to Y?”, because most of the time the analysis is supporting some decision. If this seems banal, it’s only because we have long ignored the fundamental challenges of estimating these values from observational data, and are only now gaining the tools to do so.

What’s next

Of course, these revolutions overlap quite a bit, so hard boundaries are impossible to draw. There are Bayesian neural networks, and there are neural networks designed to estimate causal effects.

But there seems, to me at least, to be more overlap between the revolutions in Bayesian and causal inference than there is between either and deep learning. The main reason is that both idealized workflows start from the same place, which is to write down how the data that you observe was generated, at least qualitatively.

My prediction, then, is that the boundaries between these two forms of inference, and the tools used on either end, will continue to blur, until a future author of a post like this would consider lumping them into one bucket.